Kubernetes is a platform for managing containers across multiple hosts. It provides many management features for container-oriented applications, such as auto-scaling, rolling deployments, compute resource management, high availability (HA), and storage management. Like containers, it is designed to run anywhere: on bare metal, in your own data center, in the public cloud, or in a hybrid cloud.

A Deployment in Kubernetes ensures that a specified number of pods (groups of containers) are up and running.
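As a rough illustration of that idea, the sketch below asks Kubernetes to keep three pods running for one Deployment. The deployment name `web` and the `nginx` image are placeholders picked for this example, not something taken from this post, and the function only calls kubectl once you invoke it against a cluster you are connected to.

```shell
# Hypothetical names for illustration; adjust to your own workload.
DEPLOYMENT_NAME="web"
IMAGE="nginx"
REPLICAS=3

# Create a Deployment and ask Kubernetes to keep $REPLICAS pods running.
create_deployment() {
  kubectl create deployment "$DEPLOYMENT_NAME" --image="$IMAGE" --replicas="$REPLICAS"
  # Show the desired vs. ready pod counts for the Deployment.
  kubectl get deployment "$DEPLOYMENT_NAME"
}
```

Running `create_deployment` and then deleting one of the pods should show Kubernetes starting a replacement to get back to three.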

Azure Kubernetes Service (AKS) makes it simple to deploy a managed Kubernetes cluster in Azure. AKS reduces the complexity and operational overhead of managing Kubernetes by offloading much of that responsibility to Azure.

Because AKS is a hosted Kubernetes service, Azure handles critical tasks like health monitoring and maintenance for you. The Kubernetes masters are managed by Azure; you only manage and maintain the agent nodes.

As a managed Kubernetes service, AKS itself is free: you pay only for the agent nodes within your clusters, not for the masters.

There are various ways to deploy an AKS cluster. In this post we will deploy through the Azure portal and also share the Azure CLI commands and Terraform code.

For improved security and management, AKS lets you integrate with Azure Active Directory and use Kubernetes role-based access controls. You can also monitor the health of your cluster and resources.

In the Azure portal, navigate to Kubernetes Service, click on it, and follow the screenshots:

Above is the first page of the AKS deployment, where we fill in the basic settings:

- Project details - Select an Azure subscription, then select or create an Azure resource group.

- Cluster Details - Select a region, Kubernetes version, and DNS name prefix for the AKS cluster.

- Select a VM size for the AKS nodes. The VM size cannot be changed once an AKS cluster has been deployed.

- Select the number of nodes to deploy into the cluster. I selected 1, as this is for testing purposes.

Next, click Scale, keep the default options, and then click Authentication.

On the Authentication page, configure the following options:

- Enable the option for Kubernetes role-based access controls (RBAC). This will provide more fine-grained control over access to the Kubernetes resources deployed in your AKS cluster.

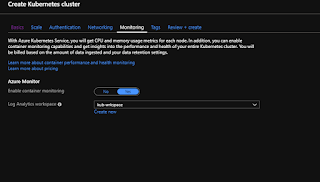

Click Networking.

By default, Basic networking is used, and Azure Monitor for containers is enabled.

Click Review + create and then Create when validation completes.
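For readers who prefer the Azure CLI to the portal, the same basic settings can be sketched roughly as below. The resource group and cluster names reuse the placeholders from the commands in this post; the `eastus` region is an assumption made for the example. The function runs nothing until you call it.

```shell
# Placeholder values (assumptions); replace with your own.
RESOURCE_GROUP="ResourceGroup"
CLUSTER_NAME="AKSCluster"
LOCATION="eastus"
NODE_COUNT=1

create_aks_cluster() {
  # Create the resource group that will hold the cluster.
  az group create --name "$RESOURCE_GROUP" --location "$LOCATION"

  # Create a single-node cluster with Azure Monitor for containers enabled.
  az aks create \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --node-count "$NODE_COUNT" \
    --enable-addons monitoring \
    --generate-ssh-keys
}
```

Calling `create_aks_cluster` from Azure Cloud Shell should give roughly the same result as the portal walkthrough above, with the remaining options left at their defaults.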

The deployment takes a few minutes. It creates the master node and the worker node, and then deploys all the related resources.

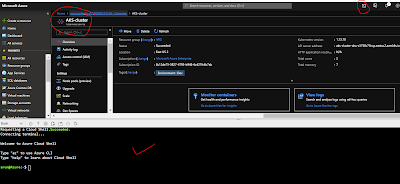

The kubectl client is pre-installed in Azure Cloud Shell. Open Cloud Shell with the >_ button at the top of the Azure portal, as shown below along with the AKS overview:

To configure kubectl to connect to your Kubernetes cluster, use the az aks get-credentials command. This command downloads credentials and configures the Kubernetes CLI to use them.

az aks get-credentials --resource-group ResourceGroup --name AKSCluster

To verify the connection to your cluster, use the kubectl get command to return a list of the cluster nodes.

kubectl get nodes
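If you want to script this check rather than read the table by eye, one possible approach is to count the nodes whose status is `Ready`. This is only a sketch: the helper name `count_ready_nodes` is made up for this example, and it assumes the usual `kubectl get nodes` column layout where STATUS is the second column.

```shell
# Count nodes whose STATUS column is exactly "Ready".
# Nodes shown as "NotReady" or "Ready,SchedulingDisabled" are not counted.
count_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

For the single-node test cluster created in this post, you would expect `count_ready_nodes` to print 1 once the node has finished starting.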

Below are the commands that I ran after creating the cluster:

So far we have seen how to deploy AKS, a few Kubernetes basics, and how to connect to the cluster and check the nodes. Let's look at some more AKS features:

AKS supports RBAC, which lets you control access to Kubernetes resources and namespaces, and the permissions on those resources.

You can also configure an AKS cluster to integrate with Azure Active Directory (AD). With Azure AD integration, Kubernetes access can be configured based on existing identity and group membership.
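To make the RBAC and Azure AD points a little more concrete, here is a minimal sketch that gives one Azure AD group read-only access to a single namespace. The namespace `dev`, the binding name `dev-viewers`, and the all-zero group object ID are placeholders invented for this example; it also assumes the cluster was created with Azure AD integration, in which case groups are typically referenced by their object ID.

```shell
# Hypothetical values; replace with your own namespace and Azure AD group object ID.
NAMESPACE="dev"
AAD_GROUP_ID="00000000-0000-0000-0000-000000000000"

grant_view_access() {
  kubectl create namespace "$NAMESPACE"
  # Bind the built-in "view" ClusterRole to the group, scoped to one namespace.
  kubectl create rolebinding dev-viewers \
    --clusterrole=view \
    --group="$AAD_GROUP_ID" \
    --namespace="$NAMESPACE"
}
```

Members of that group should then be able to list resources in `dev` but not change them.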

Azure Monitor for containers collects memory and processor metrics from containers, nodes, and controllers. Container logs are available, and you can also review the Kubernetes master logs.

Cluster node and pod scaling - You can use the horizontal pod autoscaler, the cluster autoscaler, or both. This approach to scaling lets the AKS cluster automatically adjust to demand and run only the resources needed.
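A rough sketch of turning on both autoscalers is shown below. The deployment name `web` and the min/max values are assumptions chosen for this example, and the resource group and cluster names are the placeholders used elsewhere in this post. Note that the horizontal pod autoscaler generally needs CPU requests set on the pods to do anything useful.

```shell
# Placeholder values (assumptions); replace with your own.
RESOURCE_GROUP="ResourceGroup"
CLUSTER_NAME="AKSCluster"
DEPLOYMENT_NAME="web"

enable_autoscaling() {
  # Horizontal pod autoscaler: keep between 1 and 5 pods, targeting 50% CPU.
  kubectl autoscale deployment "$DEPLOYMENT_NAME" --cpu-percent=50 --min=1 --max=5

  # Cluster autoscaler: let AKS keep between 1 and 3 nodes in the node pool.
  az aks update \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --enable-cluster-autoscaler \
    --min-count 1 \
    --max-count 3
}
```

The two work at different levels: the first changes the number of pods, the second changes the number of nodes available to run them.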

Cluster node upgrades - Azure Kubernetes Service offers multiple Kubernetes versions. As new versions become available in AKS, your cluster can be upgraded using the Azure portal or Azure CLI. During the upgrade process, nodes are carefully cordoned and drained to minimize disruption to running applications.
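With the Azure CLI, an upgrade could look something like the sketch below. The helper names are made up for this example and the resource group and cluster names are the same placeholders as before. The target version is deliberately left as an argument, since the versions on offer depend on your region and current cluster version.

```shell
# Placeholder values (assumptions); replace with your own.
RESOURCE_GROUP="ResourceGroup"
CLUSTER_NAME="AKSCluster"

# List the Kubernetes versions this cluster can be upgraded to.
list_upgrades() {
  az aks get-upgrades \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --output table
}

# Upgrade the cluster to the version passed as the first argument.
upgrade_cluster() {
  if [ -z "${1:-}" ]; then
    echo "usage: upgrade_cluster <kubernetes-version>" >&2
    return 1
  fi
  az aks upgrade \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --kubernetes-version "$1"
}
```

A typical flow would be to run `list_upgrades` first and then pass one of the listed versions to `upgrade_cluster`.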

Storage volume support - To support application workloads, you can mount storage volumes for persistent data. Both static and dynamic volumes can be used, backed by either Azure Disks for single pod access or Azure Files for multiple concurrent pod access.
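As an example of the dynamic case, the sketch below requests a 5 GiB volume through a PersistentVolumeClaim. The claim name `demo-data` and the size are invented for this example. No storage class is named, so the claim relies on whatever default storage class the cluster provides; that this default is disk-backed is an assumption you should confirm with `kubectl get storageclass`.

```shell
# Request a dynamically provisioned volume using the cluster's default storage class.
create_pvc() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-data        # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce      # mounted read-write by a single node at a time
  resources:
    requests:
      storage: 5Gi
EOF
}
```

A pod can then mount the claim by name. For storage shared by several pods at once, a `ReadWriteMany` claim against a file-share-backed storage class would be the closer match.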

Network settings - An AKS cluster can be deployed into an existing virtual network. In this configuration, every pod in the cluster is assigned an IP address in the virtual network and can communicate directly with other pods in the cluster and with other nodes in the virtual network. Pods can also connect to other services in a peered virtual network, and to on-premises networks over ExpressRoute or site-to-site (S2S) VPN connections.

The HTTP application routing add-on makes it easy to access applications deployed to your AKS cluster. When enabled, the HTTP application routing solution configures an ingress controller in your AKS cluster. As applications are deployed, publicly accessible DNS names are configured automatically. The add-on configures a DNS zone and integrates it with the AKS cluster. You can then deploy Kubernetes ingress resources as normal.

AKS supports the Docker image format. For private storage of your Docker images, you can integrate AKS with Azure Container Registry (ACR).
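One way to set up that integration from the Azure CLI is sketched below. The registry name `myregistry` is a placeholder invented for this example, and the resource group and cluster names are the ones used earlier in this post. It assumes the registry already exists and that your account has permission to assign roles on it.

```shell
# Placeholder values (assumptions); replace with your own.
RESOURCE_GROUP="ResourceGroup"
CLUSTER_NAME="AKSCluster"
ACR_NAME="myregistry"

# Allow the cluster to pull images from the registry.
attach_acr() {
  az aks update \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --attach-acr "$ACR_NAME"
}
```

After `attach_acr` completes, pods should be able to pull images from that registry without a separate image pull secret.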