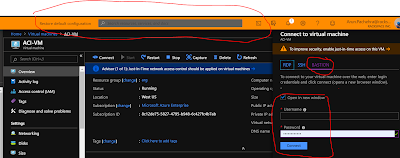

Yesterday a customer asked me to attach a managed disk to an Azure VM. I opened the portal, thinking it was just a single VM and I only had to click Add disk, but I did not get any option to create and attach a managed disk.

When I couldn't do it from the portal, I thought I'd try PowerShell. I put the cmdlets together, ran them, and got one of the clearest errors I have ever seen on my screen:

"Managed disk not supported on Unmanaged OS disk & Vice Versa"
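A quick way to avoid this surprise is to check which kind of OS disk the VM has before trying to attach anything. Here is a minimal Python sketch, assuming you have the VM description as JSON (for example from `az vm show`) and that a managed OS disk shows up under `storageProfile.osDisk.managedDisk` while an unmanaged one shows up under `storageProfile.osDisk.vhd`. The function name and the sample data are mine, just for illustration.

```python
import json


def os_disk_kind(vm: dict) -> str:
    """Return 'managed', 'unmanaged' or 'unknown' for a VM description.

    Assumes the JSON layout described above (an assumption, not a guarantee):
    managed OS disks carry a 'managedDisk' object, unmanaged ones a 'vhd' object.
    """
    storage_profile = vm.get("storageProfile") or {}
    os_disk = storage_profile.get("osDisk") or {}
    if os_disk.get("managedDisk"):
        return "managed"
    if os_disk.get("vhd"):
        return "unmanaged"
    return "unknown"


# Hypothetical sample: a VM whose OS disk is a VHD in a storage account.
sample_vm = json.loads("""
{
  "name": "demo-vm",
  "storageProfile": {
    "osDisk": {
      "name": "demo-vm-os",
      "vhd": {"uri": "https://example.invalid/vhds/demo-vm-os.vhd"},
      "managedDisk": null
    }
  }
}
""")

print(os_disk_kind(sample_vm))
```

If this reports `unmanaged`, a managed data disk cannot be attached to that VM as it stands.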

So no, this is not possible: a VM with an unmanaged OS disk cannot take a managed data disk, and vice versa. That would make for a very short post, so let's look at a few differences between managed and unmanaged disks.

Essentially, managed disks are easier to use because you don't have to create a storage account for them. That matters because storage accounts have limits, such as a maximum IOPS, so if you place too many disks in one storage account you can hit that limit. One storage account provides 20,000 IOPS and each disk can use up to 500 IOPS, so 20,000 / 500 = 40: no more than 40 disks per storage account.
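The arithmetic behind that 40-disk figure can be sketched in a few lines of Python. The two constants are the numbers quoted above (20,000 IOPS per storage account, 500 IOPS per disk); the function names are just for illustration.

```python
# Limits quoted in this post; adjust them if your account or disk type differs.
STORAGE_ACCOUNT_MAX_IOPS = 20_000
DISK_MAX_IOPS = 500


def max_disks_per_account(account_iops: int = STORAGE_ACCOUNT_MAX_IOPS,
                          disk_iops: int = DISK_MAX_IOPS) -> int:
    """How many disks fit in one account if every disk runs at its full IOPS."""
    return account_iops // disk_iops


def may_be_throttled(disk_count: int,
                     account_iops: int = STORAGE_ACCOUNT_MAX_IOPS,
                     disk_iops: int = DISK_MAX_IOPS) -> bool:
    """True if the disks together could ask for more IOPS than the account offers."""
    return disk_count * disk_iops > account_iops


print(max_disks_per_account())  # 40
print(may_be_throttled(40))     # False
print(may_be_throttled(41))     # True
```

With unmanaged disks this is a check you have to do yourself for every storage account; with managed disks you don't have to think about it.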

If you have VMs in an Availability Set, Azure makes sure their managed disks are placed on different storage "stamps", so the disks are spread out and do not become a single point of failure.

By default, a snapshot of a managed disk is a full snapshot, not an incremental one, so it adds to the storage cost. (Azure later added an opt-in incremental snapshot option.)

Managed disks originally supported only LRS. ZRS has since been added for some disk types, but there is still no GRS option.
