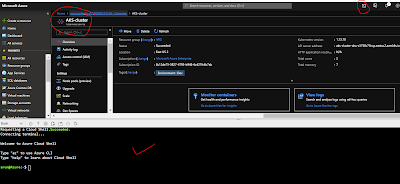

In the last post we created an AKS cluster. In this post we will use that same cluster to deploy the Azure Vote app. Follow the screenshots and commands below.

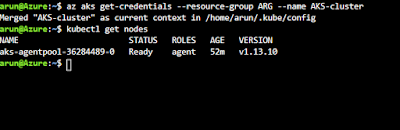

First, let's start the Azure CLI, get the cluster's credentials, and check the nodes to confirm that everything is running as expected:

az aks get-credentials --resource-group ResourceGroup --name AKSCluster

kubectl get nodes
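If you would rather check node readiness from a script than by eye, the output of kubectl get nodes can be filtered. The helper below is only a sketch: the function name count_not_ready is made up for illustration, and it assumes the default table layout in which STATUS is the second column.

```shell
# Hypothetical helper (not part of kubectl): counts nodes whose STATUS
# column is anything other than exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage, assuming kubectl already points at the cluster:
#   kubectl get nodes --no-headers | count_not_ready
```

A result of 0 means every listed node reports Ready. A cordoned node shows a status such as Ready,SchedulingDisabled, so this sketch counts it as not ready.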

A Kubernetes manifest file defines a desired state for the cluster, such as which container images to run. We will use a manifest file for the Azure Vote app, and it will deploy all the relevant components.

Let's open the vi or nano editor, create azure-vote.yaml, and copy in the definition below:

Ref link - https://docs.microsoft.com/en-us/azure/aks/kubernetes-walkthrough-portal

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: azure-vote-back
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: azure-vote-back
      template:
        metadata:
          labels:
            app: azure-vote-back
        spec:
          nodeSelector:
            "beta.kubernetes.io/os": linux
          containers:
          - name: azure-vote-back
            image: redis
            resources:
              requests:
                cpu: 100m
                memory: 128Mi
              limits:
                cpu: 250m
                memory: 256Mi
            ports:
            - containerPort: 6379
              name: redis
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: azure-vote-back
    spec:
      ports:
      - port: 6379
      selector:
        app: azure-vote-back
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: azure-vote-front
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: azure-vote-front
      template:
        metadata:
          labels:
            app: azure-vote-front
        spec:
          nodeSelector:
            "beta.kubernetes.io/os": linux
          containers:
          - name: azure-vote-front
            image: microsoft/azure-vote-front:v1
            resources:
              requests:
                cpu: 100m
                memory: 128Mi
              limits:
                cpu: 250m
                memory: 256Mi
            ports:
            - containerPort: 80
            env:
            - name: REDIS
              value: "azure-vote-back"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: azure-vote-front
    spec:
      type: LoadBalancer
      ports:
      - port: 80
      selector:
        app: azure-vote-front

Once the file is saved, run the command below to deploy it:

kubectl apply -f azure-vote.yaml
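kubectl apply returns once the objects have been accepted by the cluster, which is before the pods are necessarily running. If you want the shell to wait until both Deployments have finished rolling out, something like the following sketch could be used. The function name wait_for_rollouts and the 120 second timeout are assumptions chosen for illustration; the Deployment names come from the manifest.

```shell
# Hypothetical helper: waits for both Deployments from azure-vote.yaml.
# Stops at the first rollout that fails or exceeds the timeout.
wait_for_rollouts() {
  for deploy in azure-vote-back azure-vote-front; do
    kubectl rollout status "deployment/$deploy" --timeout=120s || return 1
  done
}

# Usage, after `kubectl apply -f azure-vote.yaml`:
#   wait_for_rollouts && echo "both deployments are ready"
```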

When the application runs, a Kubernetes service exposes the application front end to the internet. This process can take a few minutes to complete.

To monitor progress, use the kubectl get service command with the --watch argument:

kubectl get service azure-vote-front --watch
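While the load balancer is being provisioned, the EXTERNAL-IP column shows <pending>. As a rough sketch, the address can be pulled out of the table once it appears. The function name external_ip is made up for illustration, and the code assumes the default column order of kubectl get service, where EXTERNAL-IP is the fourth column.

```shell
# Hypothetical helper: prints the EXTERNAL-IP value from the default
# `kubectl get service` table once it holds a real address.
# Prints nothing while the value is still <pending> or <none>.
external_ip() {
  awk 'NR > 1 && $4 != "<pending>" && $4 != "<none>" { print $4; exit }'
}

# Usage:
#   kubectl get service azure-vote-front | external_ip
```

An empty result means the address has not been assigned yet, so the command can simply be run again a little later.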

Below you can see how it ran in the cloud CLI:

Now you can see the internal and external IP addresses for the Azure Vote application. When you browse to the public IP address, it shows the app, where you can vote for cats or dogs, as shown:

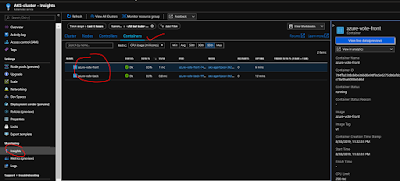

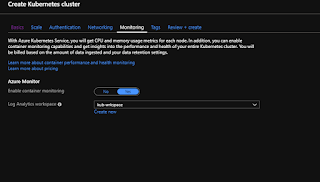

Monitor health and logs: When you created the cluster, Azure Monitor for containers was enabled. This monitoring feature provides health metrics for both the AKS cluster and the pods running on the cluster. Navigate to the cluster and follow the steps below:

- Under Monitoring on the left-hand side, choose Insights

- Across the top, choose + Add Filter

- Select Namespace as the property, then choose <All but kube-system>

- Choose to view the Containers.

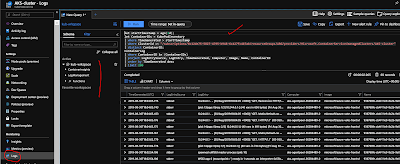

To see logs for the azure-vote-front pod, select the View container logs link on the right-hand side of the containers list. These logs include the stdout and stderr streams from the container.
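The same stdout and stderr output can also be read from the command line with kubectl logs. This is a minimal sketch, assuming kubectl still points at this cluster. The function name front_logs and the default of 20 lines are made up for illustration; the label app=azure-vote-front comes from the manifest.

```shell
# Hypothetical wrapper: prints the most recent log lines from every pod
# labelled app=azure-vote-front (the label set in azure-vote.yaml).
# Optional first argument: number of lines per pod (defaults to 20).
front_logs() {
  kubectl logs -l app=azure-vote-front --tail="${1:-20}"
}

# Usage:
#   front_logs       # last 20 lines
#   front_logs 100   # last 100 lines
```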